2025 saw an interesting clash between two AI narratives, particularly within manufacturing. On one side, AI-native product companies warned of the potential fallacy of slapping AI onto legacy systems. Their primary contention was that legacy systems designed for a bygone era are ill-suited to run in tandem with fast-paced, real-time AI agents. Instead of bolting AI onto the edges, they argued, manufacturers must embed it right into the core of their systems.

On the other side, integration experts openly advocated the immense benefits of AI overlays on top of existing systems. They cited the quick wins manufacturers could gain from bolt-on accelerators such as Power BI Quick Insights, anomaly detectors, or operator-assist agents. Product companies, however, countered that many of the pilot-level successes highlighted by integration experts often fade when scaled across the enterprise.

Both sides acknowledge their own strengths and shortcomings. For example, the AI-native product companies, who argue for a complete rebuild, openly admit that a big-bang overhaul would be detrimental. They believe manufacturers should rebuild and redesign their processes incrementally.

But time is of the essence, warn the integration experts. They advise that while manufacturers gradually embed intelligence within their core systems, they can let AI live in the bridges between those systems. AI overlays are the intelligent bridges that manufacturers will depend on for at least the next decade, since they provide the fastest and least disruptive path to impact.

At Trigent, having worked with diverse manufacturers in recent years, we believe in striking a balance between these two narratives. First, AI must work in tandem with legacy systems: it must be deployed without requiring a fundamental rebuild or disrupting the status quo. If that is one prerequisite, the other criterion is how deeply we can embed AI into those legacy systems.

This reasoning led us to our AI deployment matrix, a mental model for approaching AI solutions in 2026.

We took two primary variables into consideration:

- Cross-system dependencies: The higher the dependency, the more complex the solution.

- The cost of failure: What is the business impact if the AI crashes or is wrong? Can we live with it, or quickly roll back? Or will the aftermath carry heavy financial, operational, or reputational consequences?
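
To make the two variables concrete, here is a minimal Python sketch that places a use case in one of the four quadrants described below. The 0-to-1 scores, the `classify` function, and the 0.5 cut-off are hypothetical assumptions for illustration only; the matrix itself is a qualitative mental model, not a scoring formula.

```python
from enum import Enum


class Quadrant(Enum):
    BOLT_ON_ACCELERATORS = 1         # low dependency, low cost of failure
    CROSS_SYSTEM_DASHBOARDS = 2      # high dependency, low cost of failure
    PREBUILT_COMPOSABLE_MODULES = 3  # low dependency, high cost of failure
    CROSS_SYSTEM_CONNECTORS = 4      # high dependency, high cost of failure


def classify(cross_system_dependency: float, cost_of_failure: float,
             threshold: float = 0.5) -> Quadrant:
    """Place a use case in the matrix.

    Both inputs are hypothetical 0..1 ratings assigned during an assessment;
    the threshold that separates "low" from "high" is an illustrative assumption.
    """
    for name, score in (("cross_system_dependency", cross_system_dependency),
                        ("cost_of_failure", cost_of_failure)):
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {score}")

    high_dep = cross_system_dependency >= threshold
    high_cost = cost_of_failure >= threshold
    if high_dep and high_cost:
        return Quadrant.CROSS_SYSTEM_CONNECTORS
    if high_dep:
        return Quadrant.CROSS_SYSTEM_DASHBOARDS
    if high_cost:
        return Quadrant.PREBUILT_COMPOSABLE_MODULES
    return Quadrant.BOLT_ON_ACCELERATORS
```

For example, a single-machine troubleshooting copilot rated `classify(0.2, 0.1)` lands in Quadrant 1, while a connector that rewrites ERP promise dates, rated `classify(0.9, 0.8)`, lands in Quadrant 4.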

Let’s dive right into the AI deployment matrix and examine the solutions in each quadrant.

Fig 1: Trigent’s AI Deployment Matrix

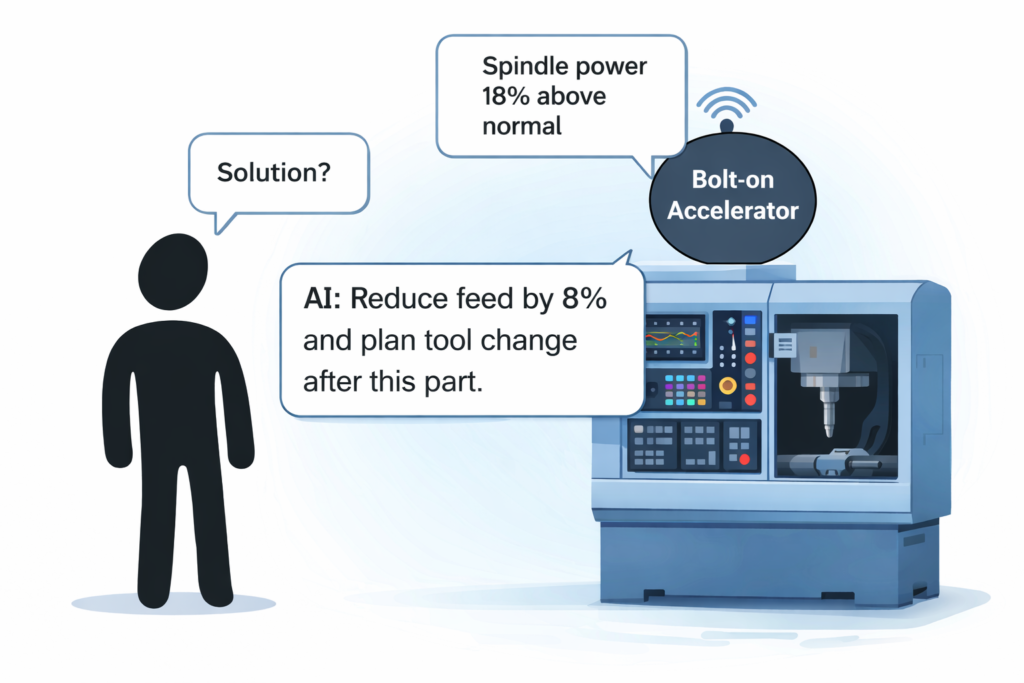

Quadrant 1: Bolt-on Accelerators (Low cross-system dependency, low cost of failure)

In 2025, bolt-on accelerators saw rapid deployment. Generative copilots and assistants helped minimize machine downtime and significantly improved workforce productivity. For example, copilots were bolted onto machines to monitor their performance, trigger alerts when anomalies spiked, and help workers troubleshoot problems through a chat interface.
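
The alerting behavior described above can be approximated with a rolling z-score check. The sketch below is a minimal Python illustration, assuming a single numeric sensor stream; the `spike_alerts` function, the window size, and the 3-sigma threshold are hypothetical choices, and production copilots may rely on entirely different detectors.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def spike_alerts(readings: Iterable[float], window: int = 20,
                 z_threshold: float = 3.0) -> List[Tuple[int, float, float]]:
    """Return (index, reading, z_score) for readings that spike past the threshold.

    Each reading is compared with the mean and population standard deviation
    of the preceding `window` readings (a rolling z-score).
    """
    history: Deque[float] = deque(maxlen=window)
    alerts: List[Tuple[int, float, float]] = []
    for i, x in enumerate(readings):
        # Only score once a full window of history is available.
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0:  # a perfectly flat signal has no meaningful z-score
                z = (x - mu) / sigma
                if abs(z) > z_threshold:
                    alerts.append((i, x, z))
        history.append(x)
    return alerts
```

In a copilot, each returned tuple would become an alert for the operator, who then investigates through the chat interface; the human stays in the loop, which keeps the cost of a false alarm low.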

What’s worth noting here is that these generative AI assistants reduced dependence on an aging workforce of highly experienced technicians with years of hands-on knowledge. They also helped retain and democratize that knowledge across the enterprise. Elsewhere, generative AI assistants helped accelerate product development, enabling engineering teams to create and iterate on designs in minutes.

Look closely and you’ll see that Q1 consists mostly of generative AI solutions deployed in an assistive capacity. They have a high tolerance for failure (mistakes are correctable because of human-in-the-loop workflows), and each is tied to a single machine or task (there are no cross-system dependencies).

Quadrant 2: Cross-system Dashboards (High cross-system dependency, low cost of failure)

Another type of AI solution that continues to garner interest is the cross-system dashboard, which organizes data from machines, sensors, and workers into easy-to-read, instantly available breakdowns.

These dashboards are implemented in three ways:

- Centralization

You physically store the data in a unified data lake or data warehouse, from which it is queried and presented through dashboards. This approach suits historical analysis and regulatory reporting, and centralized dashboards are a perfect fit for high-level plant or shopfloor overviews.

- Federation

Instead of collating data from ERP, MES, and SCADA systems into a central store, a federated dashboard uses APIs to query live data directly from these systems. It is relatively faster to implement than a centralized dashboard and is well suited to insights on daily production and comparisons of targets versus actuals.
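
A federated target-versus-actual view can be sketched as two live queries joined at request time. In this minimal Python example, the ERP and MES "sources" are hypothetical stand-in callables; a real implementation would wrap each vendor's API, and the data shapes shown are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A "source" stands in for a live API call to a system of record. Real
# connectors would wrap each vendor's API; here they are plain callables.
Source = Callable[[str], Dict[str, float]]


@dataclass
class LineStatus:
    line: str
    target_units: float
    actual_units: float

    @property
    def attainment_pct(self) -> float:
        """Actual output as a percentage of target (0 when no target is set)."""
        if self.target_units == 0:
            return 0.0
        return 100.0 * self.actual_units / self.target_units


def federated_targets_vs_actuals(day: str, erp: Source, mes: Source) -> List[LineStatus]:
    """Query both systems at request time; nothing is copied into a central store."""
    targets = erp(day)  # planned units per line (assumed ERP production plan)
    actuals = mes(day)  # produced units per line (assumed MES counters)
    return [LineStatus(line, targets[line], actuals.get(line, 0.0))
            for line in sorted(targets)]
```

The design trade-off is visible in the code: there is no pipeline or storage to build, but every dashboard refresh depends on both source systems being reachable at that moment.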

- Event-driven Analytics

An event-driven analytics dashboard is faster to implement than a federated or centralized one. Instead of pulling data from sources, it subscribes to state changes in your systems and provides insights on how those changes would impact shopfloor performance. In other words, it listens for events such as a machine stopping (from MES), a maintenance job being delayed (from CMMS), or an order falling at risk (from ERP), and feeds these data points into an AI model. The model in turn provides predictive insights such as the likelihood of machine downtime, the number of orders that will miss their SLA as a result of the downtime, or the percentage increase in backlog owing to the delay.
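
The subscribe-and-react pattern can be sketched with a minimal in-process publish/subscribe bus. In this Python example, `EventBus` is a stand-in for a real message broker, and `ShopfloorRiskModel` is a hypothetical placeholder for the AI model: its scoring rules are purely illustrative and only cover the MES and CMMS events.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, DefaultDict, Dict, List


@dataclass
class Event:
    source: str    # e.g. "MES", "CMMS", "ERP"
    kind: str      # e.g. "machine_stopped", "maintenance_delayed"
    payload: dict


class EventBus:
    """Minimal in-process publish/subscribe bus (stand-in for a real broker)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[kind].append(handler)

    def publish(self, event: Event) -> None:
        # Push the event to every handler registered for its kind.
        for handler in self._subscribers[event.kind]:
            handler(event)


@dataclass
class ShopfloorRiskModel:
    """Placeholder for the predictive model; the rules below are illustrative only."""
    open_orders_by_machine: Dict[str, int]
    downtime_risk: Dict[str, float] = field(default_factory=dict)
    insights: List[str] = field(default_factory=list)

    def on_machine_stopped(self, event: Event) -> None:
        machine = event.payload["machine"]
        self.downtime_risk[machine] = 1.0
        at_risk = self.open_orders_by_machine.get(machine, 0)
        self.insights.append(f"{machine} stopped: {at_risk} orders may miss SLA")

    def on_maintenance_delayed(self, event: Event) -> None:
        machine = event.payload["machine"]
        # Illustrative rule: each day of delay adds 10 points of downtime risk.
        risk = min(1.0, self.downtime_risk.get(machine, 0.0) + 0.1 * event.payload["days"])
        self.downtime_risk[machine] = risk
        self.insights.append(f"{machine} maintenance delayed: downtime risk {risk:.0%}")
```

Wiring it up means subscribing the model's handlers (for example, `bus.subscribe("machine_stopped", model.on_machine_stopped)`); from then on, insights are recomputed only when a relevant state change is published, rather than on a polling schedule.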

Quadrant 3: Prebuilt Composable AI-native Modules (Low cross-system dependency, high cost of failure)

Prebuilt, Modular, Composable AI solutions

The prebuilt modules are focused, independent components that can grow, change, and even be replaced without disrupting the whole system. They are arranged in a composable architecture, each designed to solve a high-impact problem within a domain. Because each module is restricted to a particular domain and serves as the core of that domain’s operations, even small errors can lead to significant operational disruption.

Let’s dive a little deeper into two of these modules to understand how these automated solutions function with AI embedded at their core.

The Order-to-Cash Module: This enables customers to place orders and complete payments seamlessly. It also syncs automatically with accounting systems, helping accelerate cash flow. It is built from several sub-modules, including multi-currency support, automated accounting integration with tools like QuickBooks Online (QBO), and bulk ordering capabilities.

The intelligence layer comes into play during order processing. When an order is placed, the embedded AI predicts fulfillment confidence and recommends whether to accept the order in full, accept it partially, delay acceptance, or reject it altogether. On the payment side, the system suggests appropriate options for each customer, such as prepayment, immediate payment, or deferred payment, based on factors like customer history and currency volatility.
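
The two recommendations can be sketched as simple decision rules over the model's outputs. In this minimal Python example, `fulfillment_confidence` is assumed to come from the embedded AI, and every threshold (0.85, 0.5, 0.4, 0.7, 0.95, and 5% volatility) is a hypothetical placeholder rather than a tuned value.

```python
from dataclasses import dataclass
from enum import Enum


class OrderDecision(Enum):
    ACCEPT_FULL = "accept in full"
    ACCEPT_PARTIAL = "accept partially"
    DELAY = "delay acceptance"
    REJECT = "reject"


class PaymentOption(Enum):
    PREPAYMENT = "prepayment"
    IMMEDIATE = "immediate payment"
    DEFERRED = "deferred payment"


@dataclass
class OrderContext:
    fulfillment_confidence: float  # assumed model output in [0, 1] for the full quantity
    fulfillable_fraction: float    # share of the quantity that can ship on time
    on_time_payment_rate: float    # customer payment history, in [0, 1]
    currency_volatility: float     # e.g. recent FX volatility (hypothetical input)


def recommend_order(ctx: OrderContext) -> OrderDecision:
    # Thresholds are illustrative assumptions, not tuned values.
    if ctx.fulfillment_confidence >= 0.85:
        return OrderDecision.ACCEPT_FULL
    if ctx.fulfillable_fraction >= 0.5:
        return OrderDecision.ACCEPT_PARTIAL
    if ctx.fulfillment_confidence >= 0.4:
        return OrderDecision.DELAY
    return OrderDecision.REJECT


def recommend_payment(ctx: OrderContext) -> PaymentOption:
    # Weak payment history or a volatile currency: collect up front.
    if ctx.on_time_payment_rate < 0.7 or ctx.currency_volatility > 0.05:
        return PaymentOption.PREPAYMENT
    # Strong history and a stable currency: offer deferred terms.
    if ctx.on_time_payment_rate >= 0.95:
        return PaymentOption.DEFERRED
    return PaymentOption.IMMEDIATE
```

Because these outputs change the order and payment state directly, a module like this sits in Quadrant 3: a wrong threshold affects real orders, not just a dashboard.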

In essence, intelligence is embedded directly into the order fulfillment, payment, and accounting synchronization workflows.

The Inventory-to-Fulfillment Module: This is ideal if you need real-time control of multi-warehouse distribution. Some of the sub-modules include:

- Location management, which pinpoints the exact physical spot of each item within the warehouse.

- Multi-warehouse support, which determines the optimal warehouse to source each order from.

- Lot and batch tracking for precise recalls in the event of compliance issues.

- Zone tracking to streamline picking operations.

- Receiving and putaway workflows that ensure incoming goods are stored in the most appropriate bins to accelerate downstream fulfillment.

In this case, where does the intelligence reside? The AI essentially lives in the stock transfer, replenishment, and picking logic. For instance, when the system detects a demand spike or dip, the AI auto-suggests inter-warehouse transfer orders before stockouts or excess inventory occur. During fulfillment, the AI dynamically adjusts pick sequences by factoring in expiry dates, travel distance, and congestion, favoring the shortest and least congested paths.
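
Both behaviors can be sketched with simple heuristics standing in for the AI. In this minimal Python example, `suggest_transfers` greedily moves surplus stock to projected shortfalls, and `plan_picks` selects lots first-expired-first-out (FEFO) and then orders the visits by a nearest-neighbor rule using Manhattan distance plus a per-zone congestion penalty. All names, the forecast input, and the penalty values are hypothetical assumptions, not the module's actual logic.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Tuple


def suggest_transfers(on_hand: Dict[str, int], forecast: Dict[str, int],
                      safety_stock: int = 0) -> List[Tuple[str, str, int]]:
    """Suggest (from_warehouse, to_warehouse, qty) moves for a single SKU."""
    # Positive need = projected shortfall; negative need = surplus that can move.
    need = {wh: forecast.get(wh, 0) + safety_stock - qty for wh, qty in on_hand.items()}
    surplus = {wh: -n for wh, n in need.items() if n < 0}
    transfers: List[Tuple[str, str, int]] = []
    # Serve the largest shortfalls first, drawing from the largest surpluses first.
    for dest in sorted((wh for wh, n in need.items() if n > 0), key=lambda wh: -need[wh]):
        remaining = need[dest]
        for src in sorted(surplus, key=lambda wh: -surplus[wh]):
            qty = min(remaining, surplus[src])
            if qty > 0:
                transfers.append((src, dest, qty))
                surplus[src] -= qty
                remaining -= qty
            if remaining == 0:
                break
    return transfers


@dataclass(frozen=True)
class Lot:
    lot_id: str
    expiry: date
    aisle: int
    bay: int
    zone: str
    qty: int


def plan_picks(lots: List[Lot], qty_needed: int, congestion: Dict[str, float],
               start: Tuple[int, int] = (0, 0)) -> List[Tuple[Lot, int]]:
    """Pick lots first-expired-first-out, then order visits by travel + congestion.

    Returns (lot, qty_to_pick) pairs in visiting order; if stock is short,
    it returns whatever is available.
    """
    chosen: List[Tuple[Lot, int]] = []
    for candidate in sorted(lots, key=lambda x: x.expiry):
        if qty_needed <= 0:
            break
        take = min(candidate.qty, qty_needed)
        chosen.append((candidate, take))
        qty_needed -= take

    route: List[Tuple[Lot, int]] = []
    pos = start
    while chosen:
        # Greedy nearest neighbour: Manhattan distance plus the zone's congestion penalty.
        nxt = min(chosen, key=lambda c: abs(c[0].aisle - pos[0]) + abs(c[0].bay - pos[1])
                  + congestion.get(c[0].zone, 0.0))
        route.append(nxt)
        chosen.remove(nxt)
        pos = (nxt[0].aisle, nxt[0].bay)
    return route
```

Note that expiry decides *which* lots are picked, while distance and congestion decide the *order* of the visits; a heavily congested zone can push an otherwise nearby lot to the end of the route.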


Quadrant 4: Cross-System Connectors (High cross-system dependency, high cost of failure)

Notably, Quadrants 1 and 2 are read-only solutions. AI doesn’t write to the systems; it only reads machine data and triggers notifications, tickets, or recommendations, without acting on its own. In both quadrants, AI is advisory. In Quadrant 3, however, AI doesn’t just read: it commits changes, adjusting the operational state of systems within a particular domain. Observe that these systems are AI-native.

In Quadrant 4, this ability to write to or alter the state of systems extends to legacy machines, with AI interacting directly with existing systems (ERP, MES, PLC). This read-and-write interaction happens in two ways:

- AI on top of your legacy machines. A front end lets humans provide their input in natural language. The AI (an LLM) feeds this information to the legacy system through integration middleware, and the resulting system changes are relayed back to the humans through the AI.

- AI between legacy machines. One of our predictions for 2026 is that manufacturers will increasingly adopt an integration suite of connectors for their ERP, MES, SFC, SCM, SCADA, and EDI systems. The AI embedded within these connectors will coordinate automated actions across systems. For example, when your SCM or EDI system receives a late shipment notice from one of your key suppliers, the AI in the middle will automatically resequence jobs in the APS (advanced planning and scheduling) system and adjust ERP capacity and promise dates before triggering a purchase-expediting workflow with an alternative supplier.
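
The late-shipment scenario can be sketched as a small orchestration function that calls each connector in the order the example describes. In this minimal Python example, the connector interfaces (`APSConnector`, `ERPConnector`, `PurchasingConnector`), the in-memory fakes, and the rule of shifting promise dates by the full delay are all hypothetical assumptions; real connectors would wrap each vendor's API, and the AI's role would be to choose the actions rather than follow fixed steps.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Dict, List, Optional, Protocol


@dataclass
class LateShipmentNotice:
    supplier: str
    part: str
    delay_days: int
    affected_orders: List[str]


# Hypothetical connector interfaces; real ones would wrap each vendor's API.
class APSConnector(Protocol):
    def resequence_jobs(self, part: str, delay_days: int) -> List[str]: ...


class ERPConnector(Protocol):
    def adjust_capacity(self, job_ids: List[str]) -> None: ...
    def promise_date(self, order_id: str) -> date: ...
    def update_promise_date(self, order_id: str, new_date: date) -> None: ...


class PurchasingConnector(Protocol):
    def start_expedite(self, part: str, alternate_supplier: str) -> str: ...


def handle_late_shipment(notice: LateShipmentNotice, aps: APSConnector,
                         erp: ERPConnector, purchasing: PurchasingConnector,
                         alternates: Dict[str, str]) -> List[str]:
    """Coordinate APS, ERP and purchasing; returns an audit log of actions taken."""
    log: List[str] = []
    # 1. Resequence production jobs that depend on the late part.
    job_ids = aps.resequence_jobs(notice.part, notice.delay_days)
    log.append(f"APS resequenced {len(job_ids)} jobs")
    # 2. Rebalance ERP capacity and push promise dates (naively, by the full delay).
    erp.adjust_capacity(job_ids)
    for order_id in notice.affected_orders:
        new_date = erp.promise_date(order_id) + timedelta(days=notice.delay_days)
        erp.update_promise_date(order_id, new_date)
    log.append(f"ERP capacity and {len(notice.affected_orders)} promise dates updated")
    # 3. Expedite with an alternative supplier, if one is on file.
    alternate: Optional[str] = alternates.get(notice.part)
    if alternate:
        ticket = purchasing.start_expedite(notice.part, alternate)
        log.append(f"Expedite workflow {ticket} started with {alternate}")
    return log


# In-memory stand-ins for a dry run (hypothetical, not real systems).
class FakeAPS:
    def resequence_jobs(self, part: str, delay_days: int) -> List[str]:
        return [f"JOB-{part}-1", f"JOB-{part}-2"]


@dataclass
class FakeERP:
    promises: Dict[str, date]
    capacity_adjusted: List[str] = field(default_factory=list)

    def adjust_capacity(self, job_ids: List[str]) -> None:
        self.capacity_adjusted.extend(job_ids)

    def promise_date(self, order_id: str) -> date:
        return self.promises[order_id]

    def update_promise_date(self, order_id: str, new_date: date) -> None:
        self.promises[order_id] = new_date


class FakePurchasing:
    def start_expedite(self, part: str, alternate_supplier: str) -> str:
        return f"EXP-{part}"
```

Typing the connectors as `Protocol`s keeps the orchestration logic independent of any particular ERP or APS vendor, and running it first against fakes like these is one way to rehearse a high-cost-of-failure workflow before it writes to production systems.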

Manufacturing AI at the Inflection Point in 2026

In 2026, Quadrant 1 and Quadrant 2 solutions are expected to scale rapidly across the manufacturing landscape. Having been extensively piloted in 2025 and proven to deliver sizable gains, these solutions are set to move from experimentation to widespread deployment. Meanwhile, Quadrant 3 and Quadrant 4 solutions are already gaining steady traction among both mid-market and large manufacturers. Together, these trends signal that 2026 is the inflection point at which manufacturing AI moves beyond isolated quick wins to deliver sustained, enterprise-scale impact.